testing to be resumed around march 23rd

history:

The initial discussion of this was in my thread on blurbusters (flood's input lag measurements), but seeing how much interest there is in noacc's thread, and that some people are requesting additional measurements/tests for various settings, I thought I'd post here as well.

Anyway, inspired by the various input lag measurements around the internet done with cheap high-speed cameras, I set out to replicate them with my own setup. So I got a casio ex-zr700, which can record at 1000fps at a tiny resolution, and started taking apart my g100s... and managed to accidentally short and fry its pcb :(. As I did not want to buy another one and take it apart, attempt to solder, etc..., I decided to forgo the button-click-to-gun-fire measurements and instead simply measure motion lag by slamming my dead g100s into my new g100s and counting how many video frames it took to see a response on my screen. The results were quite promising: I could measure with a precision and accuracy of around 1-2ms, limited by the 1000fps recording (1ms per video frame) and by how hard it is to pick out the exact frame where the mouse begins to move. But slamming the mice together and counting video frames was just tedious.

So I got an LED, a button, and an Arduino Leonardo board, which can act as a USB mouse, to automate the mouse slamming. With it, I can just press a button to make the "cursor" instantly twitch up to 127 pixels in any direction while an LED lights up at the same time. This made scrolling through the video frames a lot easier, but it was still quite tedious, and I never really got more than 20 measurements out of a single video clip.

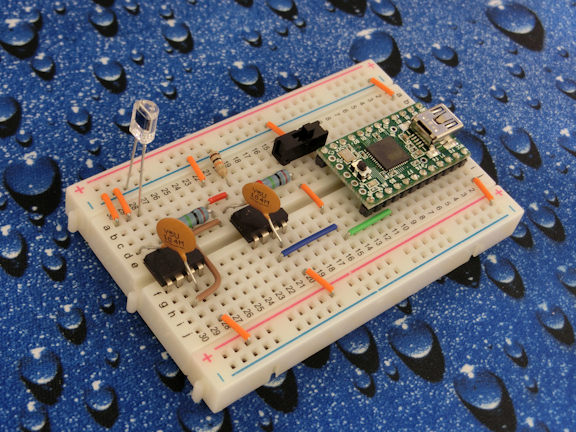

A few days ago, Sparky suggested in the blurbusters thread that I use a photodetector on the arduino to replace the video camera and thus automate the measurements. At first I was reluctant, doubting that it would be any more precise than the high-speed cam, but I soon realized that, thanks to the CRT's low persistence and the phosphors' fast rise time, it would actually work very well... so a few days of messing with electronics led to this thing:

current microsecond measuring setup:

last updated 2015 feb 12

hardware:

http://i.imgur.com/flwNG6q.jpg

https://docs.google.com/spreadsheets/d/1ckteh...=728266876

software:

<in progress>

(anyone good with electronics and/or arduino, please let me know if there's anything to be improved/fixed)

how it works:

When I slide a switch, the program begins taking measurements, at a rate of ~10 per second.

Each measurement works like this: with the in-game view staring at a dark part of the scene, the arduino twitches the cursor so that the view moves to a bright part, measures how long it takes for the photodiode to respond, and then twitches the cursor back.

There is a bit of randomness in the spacing between measurements so that they don't happen in sync with the framerate. Measurements in sync with the framerate would likely give biased results that are consistently higher or lower than the average of several independent measurements.
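To illustrate why the random spacing matters, here's a toy simulation. It's a sketch, not the actual arduino code: `FRAME_MS`, `RENDER_MS` and the functions are made up for illustration, and it assumes the game reads input once at the start of every frame and that each frame takes a fixed time to render. With fps_max 100 (10ms between frames) and measurements exactly 100ms apart, every measurement lands at the same point in the frame cycle and gives the same number; adding a random delay of up to 20ms spreads the measurements across the whole cycle.

```python
import math
import random

# Hypothetical model (assumption, not the real firmware): the game samples
# input once at the start of each frame, frames start every FRAME_MS, and a
# frame takes RENDER_MS to render before it shows up on screen.
FRAME_MS = 10.0   # fps_max 100
RENDER_MS = 0.5   # ~2000fps uncapped


def lag_for_input_at(t_ms):
    """Lag from an input at time t_ms until the frame containing it is rendered."""
    next_frame_start = math.ceil(t_ms / FRAME_MS) * FRAME_MS
    return (next_frame_start - t_ms) + RENDER_MS


def measure(n, spacing_ms, jitter_ms, start_ms=3.0, seed=1):
    """Take n measurements spaced spacing_ms apart plus 0..jitter_ms of random delay."""
    rng = random.Random(seed)
    t = start_ms
    lags = []
    for _ in range(n):
        lags.append(lag_for_input_at(t))
        t += spacing_ms + rng.uniform(0.0, jitter_ms)
    return lags


fixed = measure(1000, spacing_ms=100.0, jitter_ms=0.0)        # in sync with the framerate
randomized = measure(1000, spacing_ms=100.0, jitter_ms=20.0)  # randomized spacing

print(min(fixed), max(fixed))             # every sample is identical
print(sum(randomized) / len(randomized))  # close to RENDER_MS + FRAME_MS / 2 = 5.5
```

In this model the fixed-spacing run reports 7.5ms for every sample, above the true average of 5.5ms; a different starting phase would give a different, equally misleading constant.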

Here is all the data so far:

https://docs.google.com/spreadsheets/d/1ckteh...5CAQ-Zpkk/

preliminary conclusions:

the default fps_max 100 adds between 0 and 10ms of input lag (as expected) compared to the lag with an uncapped framerate of ~2000fps

raw input doesn't affect input lag in cs1.6

If you want me to test anything, let me know here...

Things I have available for testing:

me:

lots of knowledge of physics

basic knowledge of electronics

measuring equipment:

a 1000fps camera (casio ex-zr700)

an arduino leonardo

a teensy 2.0

various simple electronics for interfacing with photodiodes

computers/hardware:

a z97 computer, i7 4770k @ 4.6ghz

an x58 computer, i7 920 @ 3.6ghz

a gtx 970 (nvidia reference model from best buy)

a gtx 460 768mb (galaxy brand)

intel hd4000 lol

windows 7, 8.1, xp (if you want), linux (need to update 239562 things tho)

a laptop (thinkpad x220, 60hz ips screen) with win7, xp, linux (gentoo)

monitors:

two crt monitors (sony cpd-g520p, sony gdm-fw900)

2 lcd monitors (old shitty viewsonic tn from ~2009, asus vg248qe with gsync mod)

mice:

logitech g3, g100s, g302, ninox aurora, wmo 1.1

FAQ:

1. How much does this entire thing cost?

you can get everything except the teensy from digikey.

total cost is about $50 after shipping

here's a list of what's needed, with links to a digikey shopping cart:

https://docs.google.com/spreadsheets/d/1ckteh...=728266876

note: this setup doesn't work as well on LCDs because of their persistence. Unless you set some ridiculous gain, the photodiode will only respond once a significant portion of the screen is lit. On a CRT, by contrast, the phosphors have a fast rise time, so as soon as one row is lit, the photodiodes get a response as strong as when the entire screen is lit. So if you want to replicate my setup, you should get a cheap CRT.

2. How can you measure lag less than the refresh period? e.g. how do you measure < 5ms at 60hz???

CRTs use a rolling scan, which means that, except during the vblank period (~10% of the refresh cycle), the screen is constantly being redrawn from top to bottom. Since the photodiode is placed so that it is sensitive to changes in any part of the screen, the input lag of any event is unrelated to the refresh rate, as long as vsync is off and the framebuffer update doesn't occur during the vblank interval.
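A rough model of the extra display delay this leaves on a CRT. It's a sketch under stated assumptions: vsync off, vblank taking 10% of the refresh cycle at the end of each cycle, and the photodiode firing as soon as the first updated scanline is drawn. The function names are hypothetical.

```python
# Simplified model (assumption): vsync off, the photodiode reacts to the
# first scanline drawn with new content, and the only extra display delay
# comes from framebuffer updates that land inside vblank.


def display_delay_ms(t_in_cycle_ms, refresh_hz, vblank_fraction=0.10):
    """Extra delay for a framebuffer update at t_in_cycle_ms into the refresh cycle."""
    period = 1000.0 / refresh_hz
    active = period * (1.0 - vblank_fraction)  # time spent scanning visible rows
    t = t_in_cycle_ms % period
    if t < active:
        return 0.0       # the next scanned row already shows the new frame
    return period - t    # wait for vblank to end and scanout to resume


def mean_display_delay_ms(refresh_hz, vblank_fraction=0.10):
    """Average extra delay for updates spread uniformly over the cycle."""
    period = 1000.0 / refresh_hz
    vblank = period * vblank_fraction
    # An update lands in vblank with probability vblank_fraction,
    # and then waits vblank / 2 on average.
    return vblank_fraction * vblank / 2.0


print(display_delay_ms(5.0, 60))    # during active scan: no extra delay
print(display_delay_ms(16.0, 60))   # inside vblank: waits until scanout resumes
print(mean_display_delay_ms(60))    # average over the whole cycle
```

At 60hz this model gives no extra delay for updates during the active scan, at most ~1.7ms for an update that lands right at the start of vblank, and an average of only ~0.08ms, which is why lag well below the refresh period can still be measured.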

3. If the computer and arduino are perfect, and the only limitation is that the game runs at XYZ fps, how much input lag would be measured?

(frame rendering time) <= input lag <= (frame rendering time) + (time between frames)

picture explanation: http://i.imgur.com/9cSP1bM.png

(frame rendering time) is the time taken to run the cpu+gpu code and is equal to the inverse of the uncapped framerate.

(time between frames) is the actual time between the starts of consecutive frames and is equal to the inverse of the actual framerate.

So, for instance, if my game runs at 2000fps uncapped but I cap it to 100fps, I expect, at the very best, to see a uniform distribution of input lag between 0.5ms and 10.5ms.

If my game runs at 50fps uncapped and I don't cap it, I expect, at best, to see a uniform distribution of input lag between 20ms and 40ms.
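The same bounds as a small calculator. This is a sketch assuming the idealized model from the picture, where lag is uniformly distributed between the two bounds, so the mean is just the midpoint; `lag_bounds_ms` is a made-up name.

```python
def lag_bounds_ms(uncapped_fps, actual_fps):
    """(min, max, mean) input lag in ms for an otherwise perfect system."""
    render = 1000.0 / uncapped_fps   # frame rendering time
    between = 1000.0 / actual_fps    # time between the starts of consecutive frames
    lo = render
    hi = render + between
    return lo, hi, (lo + hi) / 2.0   # uniform distribution -> mean is the midpoint


print(lag_bounds_ms(2000, 100))  # → (0.5, 10.5, 5.5)   2000fps capped at fps_max 100
print(lag_bounds_ms(50, 50))     # → (20.0, 40.0, 30.0) 50fps, uncapped
```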

4. How does mouse polling rate affect input lag?

Still need to figure this out. But from first principles, polling must add at least between 0 and 1/(polling rate) of input lag, since the input can occur anywhere between two polls. Depending on the mouse firmware, it could be more.
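The first-principles bound for a few common polling rates, as a quick calculation. It's a sketch that assumes input times are uniformly spread between polls and ignores any extra delay from the mouse firmware; `polling_delay_bounds_ms` is a hypothetical helper.

```python
def polling_delay_bounds_ms(polling_hz):
    """(min, max, mean) delay in ms from polling alone.

    An input waits anywhere from 0 to one polling interval for the next poll;
    assuming input times are uniform between polls, the mean is half an interval.
    """
    interval = 1000.0 / polling_hz
    return 0.0, interval, interval / 2.0


for hz in (125, 500, 1000):
    print(hz, polling_delay_bounds_ms(hz))
# → 125 (0.0, 8.0, 4.0)
# → 500 (0.0, 2.0, 1.0)
# → 1000 (0.0, 1.0, 0.5)
```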

5. How much input lag can I feel? What does 10ms of input lag feel like?

try for yourself with this: http://forums.blurbusters.com/viewtopic.php?f=10&t=1134

My personal threshold is around 10-16ms. One osu player managed to barely pass the test for 5ms!!!11!1!11

For full-screen movement, like in 3d games, one's threshold may be lower.

6. What amount of input lag is 100% guaranteed to be insignificant in that no human can feel it, and no one's performance will be affected at all?

This is not something easy to measure since amounts of lag that you can't feel in a blind test could still affect performance... but I believe it is between 1 and 5ms. I'd guess that anything less than 2ms is absolutely insignificant.

One thing I keep in mind is that in quite a few top-level cs:go lan tournaments, the monitors used were eizo fg2421s, which are documented to have ~10ms more input lag than other 120+hz tn monitors such as the asus vg248qe, benq xl24**z/t, etc... And no one was suddenly unable to hit shots or anything. But they all played against each other on the same monitor, so who knows :P

Another thing is that when tracking objects on a low persistence monitor, it is possible to detect (with your eyes) the difference between a setup with constant input lag and a setup with input lag that fluctuates by +/-1ms. see http://www.blurbusters.com/mouse-125hz-vs-500hz-vs-1000hz/ . But I don't know of any game/situation where this affects performance.

TODO, in order of priority:

measurements:

nvidia driver versions, scaling, whatever

csgo measurements

-mixing vsync, fps_max, and max prerendered frames << IN PROGRESS

-fps_max vs external framerate caps

-"triple buffering" in nvidia settings

-raw input and rinput.exe

-multicore on/off, -threads #, mat_queue_mode << half done

-various graphics settings

windows pointer measurements

other games

repeat interesting/controversial results from above with old computer, gtx 460

bios settings, hpet, and whatever else needs mythbusting

future stuff:

mouse measurements with motion detection somehow. Maybe even connect the mouse directly to the arduino so that the computer doesn't contribute anything.

Edited by ewh at 20:16 CDT, 20 March 2015